Sandboxing

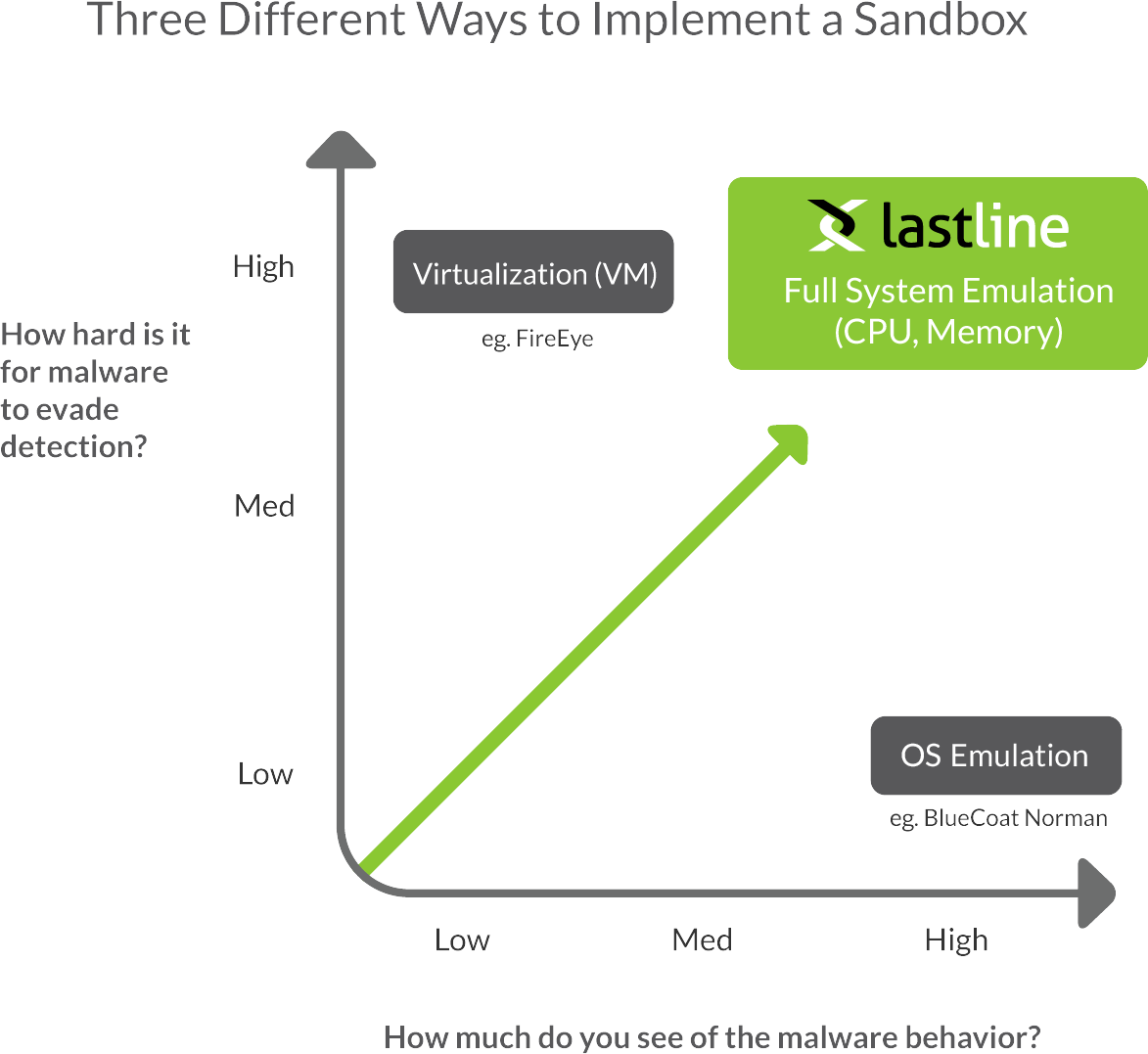

Now that we know what information we want to collect, the next question is how we can build a sandbox that can collect this data in a way that makes it difficult for malware to detect. The two main options are virtualization and emulation.

Next-Generation Sandbox Offers Comprehensive Detection of Advanced Malware

High-Resolution Security Analysis

- Virtualization: VM-based (virtual machine) sandboxing analyzes suspicious programs that run inside virtual machines. This approach provides the lowest level of visibility into relevant malware behaviors, but it is harder for sophisticated malware to evade than OS emulation.

- OS Emulation: emulating the operating system provides a high level of visibility into malware behaviors. Unfortunately, this approach is the easiest for advanced malware to detect and evade.

- Full System Emulation: the emulator simulates the physical hardware (including CPU and memory). This approach provides the deepest level of visibility into malware behavior, and it is also the hardest for advanced malware to evade.

Building an effective Sandbox

Automated malware analysis systems (or sandboxes) are one of the latest weapons in the arsenal of security vendors. Such systems execute an unknown malware program in an instrumented environment and monitor its execution. While such systems have been used as part of the manual analysis process for a while, they are increasingly used as the core of automated detection processes. The advantage of the approach is clear: because detection is based on the activity observed in the sandbox, it is possible to identify previously unseen (zero-day) malware.

Goals of a dynamic analysis system (sandbox)

A good sandbox has to achieve three goals: visibility, resistance to detection, and scalability. First, a sandbox has to see as much as possible of a program's execution; otherwise, it might miss relevant activity. Second, a sandbox has to perform monitoring in a fashion that makes it difficult to detect. Otherwise, it is easy for malware to identify the presence of the sandbox and, in response, alter its behavior to evade detection. The third goal captures the desire to run many samples through a sandbox, in a way that the execution of one sample does not interfere with the execution of subsequent malware programs. Scalability also means that it must be possible to analyze many samples in an automated fashion.
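The isolation requirement (one sample must not interfere with the next) can be illustrated with a small sketch. This is only a toy model under stated assumptions: the environment is a plain dictionary, and `BASELINE`, `analyze`, `dropper`, and `checker` are hypothetical names invented for illustration. A real sandbox would revert a VM or emulator snapshot instead of copying a dictionary.

```python
import copy

# Hypothetical clean baseline that every analysis run starts from.
BASELINE = {"files": {"C:/notes.txt": "hello"}, "registry": {}}

def diff(before, after):
    """Return entries that are new or changed relative to the baseline."""
    return {k: v for k, v in after.items() if before.get(k) != v}

def analyze(sample, baseline):
    """Run one sample against a fresh copy of the baseline and report changes."""
    env = copy.deepcopy(baseline)  # fresh environment for every sample
    sample(env)
    return {
        "files": diff(baseline["files"], env["files"]),
        "registry": diff(baseline["registry"], env["registry"]),
    }

def dropper(env):
    # Toy sample: drops a file and adds an autorun entry.
    env["files"]["C:/evil.exe"] = "payload"
    env["registry"]["Run"] = "C:/evil.exe"

def checker(env):
    # Toy sample: would behave differently if an earlier sample left traces.
    if "C:/evil.exe" in env["files"]:
        env["files"]["C:/seen.txt"] = "contaminated"

reports = [analyze(s, BASELINE) for s in (dropper, checker)]
```

Because each run starts from its own copy, the second report is empty even though the first sample modified its environment, and the baseline itself is never changed.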

What information should a sandbox collect?

When monitoring the behavior of a user-mode process, almost all sandboxes look at the system call interface or the Windows API. System calls are functions that the operating system exposes to user-mode processes so that they can interact with their environment, for example to read from files, send packets over the network, or read a registry entry on Windows. Monitoring system calls (and Windows API function calls) makes sense, but it is only one piece of the puzzle. A sandbox that monitors only such invocations is blind to everything that happens between these calls. That is, it might see that a malware program reads from a file, but it cannot determine how the malware actually processes the data that it has just read. A lot of interesting information can be gathered by looking deeper into the execution of a program. Thus, some sandboxes go one step further than hooking function calls and also monitor the instructions that a program executes between these invocations.
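The difference between call-level and instruction-level monitoring can be shown with a loose analogy in Python. The sketch below uses the standard `sys.settrace` hook: recording only `call` events stands in for hooking system calls or API functions, while recording `line` events stands in for watching what runs in between. The functions `sample` and `read_input` are hypothetical stand-ins for a monitored program; this is not how a real sandbox instruments native code.

```python
import sys

def read_input(data):
    # Stand-in for an API call the monitor can hook.
    return data.strip()

def sample(data):
    # Stand-in for a monitored program: one visible call, then
    # in-process computation that no further call reveals.
    text = read_input(data)
    key = 0
    for ch in text:
        key = (key * 31 + ord(ch)) % 256
    return key

def trace_calls_only(func, *args):
    """Record only function-call events (analogous to API hooks)."""
    events = []
    def tracer(frame, event, arg):
        if event == "call":
            events.append(frame.f_code.co_name)
        return None  # do not trace inside the called frame
    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(None)
    return result, events

def trace_lines(func, *args):
    """Record every executed line (analogous to instruction-level monitoring)."""
    events = []
    def tracer(frame, event, arg):
        if event == "line":
            events.append((frame.f_code.co_name, frame.f_lineno))
        return tracer  # keep tracing inside the called frame
    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(None)
    return result, events

result_a, call_events = trace_calls_only(sample, " abc ")
result_b, line_events = trace_lines(sample, " abc ")
```

The call-level trace shows that `sample` invoked `read_input`, but nothing about the loop that derives `key` from the data. The line-level trace records each step of that loop, at the cost of far more events.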

Emulation versus virtualization

With virtualization, the guest program actually runs on the underlying hardware. The virtualization software (the hypervisor) only controls and mediates the accesses of different programs (or different virtual machines) to the underlying hardware. In this fashion, the different virtual machines are independent and isolated from each other. However, when a program in a virtual machine is executing, it occupies the actual physical resources, and as a result, the hypervisor (and the malware analysis system) cannot run at the same time as the guest. This makes detailed data collection challenging. Moreover, it is hard to entirely hide the hypervisor from the prying eyes of malware programs. The advantage is that programs in virtual machines can run at essentially native speed.

Leveraging emulation and virtualization for malware analysis

Virtualization platforms provide significantly fewer options for collecting detailed information. The easiest way is to record the system calls that programs perform. This can be done in two different ways. First, one could instrument the guest operating system. This has the obvious drawback that a malware program might be able to detect the modified OS environment. Alternatively, one can perform system call monitoring in the hypervisor. System calls are privileged operations. Thus, when a program in a guest VM performs such an operation, the hypervisor is notified. At this point, control passes back to the sandbox, which can then gather the desired data. The big challenge is that it is very hard to efficiently record the individual instructions that a guest process executes without being detected. After all, the sandbox relinquishes control to this process between the system calls. This is a fundamental limitation for any sandbox that uses virtualization technology.
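The control flow described above, where the sandbox regains control only when the guest performs a system call, can be modeled with a Python generator. This is a simplified sketch, not a hypervisor: `guest_program`, `run_under_monitor`, and the call names `read_file` and `send_packet` are hypothetical, and each `yield` plays the role of a trap into the monitor.

```python
def guest_program():
    """Hypothetical guest: each yield models a system call that traps to the monitor."""
    data = yield ("read_file", "config.dat")
    # Everything between the two yields runs without the monitor in control.
    decoded = bytes(b ^ 0x5A for b in data)
    yield ("send_packet", decoded)

def run_under_monitor(guest, fake_file_contents):
    """Resume the guest until it traps, log the call, answer it, and resume again."""
    log = []
    gen = guest()
    try:
        call = next(gen)  # run until the first "system call"
        while True:
            log.append(call)
            reply = fake_file_contents if call[0] == "read_file" else None
            call = gen.send(reply)  # hand control back to the guest
    except StopIteration:
        pass  # guest finished
    return log

syscall_log = run_under_monitor(guest_program, b"\x32\x33")
```

The log contains the file read and the packet that was sent, but the XOR decoding step in between never appears in it. That gap is the visibility limit the paragraph above attributes to sandboxes that rely on system call monitoring alone.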

How is Lastline Sandbox built?

The second question is about performance. Isn’t emulation terribly slow? The answer is yes, if implemented in a naive way. If we emulated every instruction in software, the system would indeed not scale very well. However, we have done many clever things to speed up emulation, to a level where it is (almost) as fast as native execution. For example, one does not need to emulate all code. A lot of code can be trusted, such as Windows itself. Well, we can trust the kernel most of the time – of course, it can be compromised by rootkits. Only the malicious program (and code that this program interacts with) needs to be analyzed in detail. Also, one can perform dynamic translation. With dynamic translation, every instruction is examined in software once, and then translated into a much more efficient form that can be run directly.
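The idea behind dynamic translation can be sketched with a toy interpreter. The four-instruction machine below (`add`, `dec`, `jnz`, `halt`) and the names `translate` and `run` are invented for illustration and say nothing about how Lastline implements its emulator. Each instruction is decoded once into a Python closure and cached by its address, so a loop body is not decoded again on later iterations. Real translators emit native code for whole blocks rather than closures for single instructions.

```python
def translate(op, arg):
    """Decode one instruction into a closure (the 'translated' form)."""
    if op == "add":
        def step(s):
            s["acc"] += arg
            s["pc"] += 1
    elif op == "dec":
        def step(s):
            s["ctr"] -= 1
            s["pc"] += 1
    elif op == "jnz":
        def step(s):
            s["pc"] = arg if s["ctr"] != 0 else s["pc"] + 1
    elif op == "halt":
        def step(s):
            s["pc"] = -1
    else:
        raise ValueError(f"unknown instruction: {op}")
    return step

def run(program, ctr, use_cache=True):
    """Execute the program; return the accumulator and the number of decode steps."""
    state = {"acc": 0, "ctr": ctr, "pc": 0}
    cache = {}
    translations = 0
    while state["pc"] >= 0:
        pc = state["pc"]
        step = cache.get(pc) if use_cache else None
        if step is None:
            step = translate(*program[pc])  # examined in software once
            translations += 1
            cache[pc] = step
        step(state)  # run the translated form directly
    return state["acc"], translations

# Add 2 to the accumulator while the counter is non-zero.
PROGRAM = [("add", 2), ("dec", None), ("jnz", 0), ("halt", None)]

cached_result = run(PROGRAM, ctr=5, use_cache=True)
naive_result = run(PROGRAM, ctr=5, use_cache=False)
```

Both runs compute the same accumulator value. With the cache, the decode step happens once per distinct instruction; without it, every executed instruction is decoded again, which is the naive approach the text warns against.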

Summary

A sandbox offers the promise of zero-day detection capabilities. As a result, most security vendors offer some kind of sandbox as part of their solutions. However, not all sandboxes are alike, and the challenge is not to build a sandbox, but rather to build a good one. Most sandboxes leverage virtualization and rely on system calls for their detection. This is not enough, since these tools fundamentally miss a significant amount of potentially relevant behaviors. Instead, we believe that a sandbox must be an analysis platform that sees all instructions that a malware program executes, and can therefore see and react to attempts by malware authors to fingerprint and detect the runtime environment. As far as we know, Lastline is the only vendor that uses a sandbox based on system emulation, combining the visibility of an emulator with the resistance to detection (and evasion) that one gets from running the malware inside the real operating system.

The website is our proprietary property and all source code, databases, functionality, software, website designs, audio, video, text, photographs, icons and graphics on the website (collectively, the “Content”) are owned or controlled by us or licensed to us, and are protected by copyright laws and various other intellectual property rights. The content and graphics may not be copied, in part or full, without the express permission of DTS Solution LLC (owner) who reserves all rights.

DTS Solution, DTS-Solution.com, the DTS Solution logo, HAWKEYE, FYNSEC, FRONTAL, HAWKEYE CSOC WIKI and Firewall Policy Builder are registered trademarks of DTS Solution, LLC.